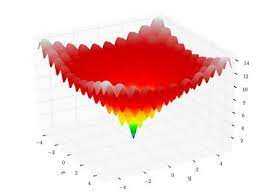

Continuous optimization means finding the minimum or maximum value of a function of one or many real variables, often subject to constraints. The constraints usually take the form of equations or inequalities. Mathematicians have studied continuous optimization since the time of Newton, Lagrange and Bernoulli.
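
In symbols, a constrained continuous optimization problem is commonly written in the standard form below. The names $f$, $g_i$ and $h_j$ are notation chosen here for illustration: $f$ is the objective, the $g_i$ are equality constraints and the $h_j$ are inequality constraints.

$$
\begin{aligned}
\min_{x \in \mathbb{R}^n} \quad & f(x) \\
\text{subject to} \quad & g_i(x) = 0, \quad i = 1, \dots, m, \\
& h_j(x) \le 0, \quad j = 1, \dots, p.
\end{aligned}
$$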

- What is a continuous variable in optimization?

- What is discrete and continuous optimization?

- What is optimizing a function?

- What are the two types of optimization?

What is a continuous variable in optimization?

Continuous optimization is a branch of optimization in applied mathematics. Unlike in discrete optimization, the variables used in the objective function are required to be continuous, that is, chosen from a set of real values with no gaps between them (values from intervals of the real line).
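
Because a continuous variable can take any real value, an algorithm can move it by arbitrarily small amounts in whichever direction decreases the objective. Gradient descent is one method that exploits this. The following is a minimal Python sketch, assuming a differentiable objective of one variable; the function `f`, its derivative `df`, the starting point and the step size are hypothetical choices for illustration.

```python
def gradient_descent(grad, x0, learning_rate=0.1, tol=1e-10, max_iter=10_000):
    """Minimize a differentiable function of one real variable.

    grad: derivative of the objective; x0: starting point (any real number).
    """
    x = x0
    for _ in range(max_iter):
        step = learning_rate * grad(x)
        x -= step
        if abs(step) < tol:  # stop once the updates become negligible
            break
    return x


# Hypothetical objective f(x) = (x - 2)**2 + 1, with derivative f'(x) = 2(x - 2).
def f(x):
    return (x - 2) ** 2 + 1


def df(x):
    return 2 * (x - 2)


x_star = gradient_descent(df, x0=-7.3)
print(round(x_star, 6), round(f(x_star), 6))  # 2.0 1.0
```

With these settings each step shrinks the distance to the minimizer x = 2 by a factor of 0.8, so the iterates pass through non-integer values on the way. That is only meaningful because x is a continuous variable.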

What is discrete and continuous optimization?

In discrete optimization, some or all of the variables in a model are required to belong to a discrete set; this is in contrast to continuous optimization in which the variables are allowed to take on any value within a range of values.
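
To see the difference concretely, consider the hypothetical objective f(x) = (x - 2.6)^2 on [0, 10]. The minimal Python sketch below solves it twice: once with x continuous (by bisection on f'(x) = 0, which finds the minimizer because this f is convex) and once with x restricted to the integers 0 to 10 (by enumeration).

```python
def bisect_root(g, lo, hi, tol=1e-12):
    """Find a root of g on [lo, hi], assuming g(lo) and g(hi) have opposite signs."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(lo) * g(mid) <= 0:  # sign change in [lo, mid]
            hi = mid
        else:                    # sign change in [mid, hi]
            lo = mid
    return (lo + hi) / 2


def f(x):
    return (x - 2.6) ** 2


def df(x):
    return 2 * (x - 2.6)


# Continuous problem: x may be any real number in [0, 10].
x_cont = bisect_root(df, 0.0, 10.0)

# Discrete problem: x must be an integer in {0, 1, ..., 10}.
x_disc = min(range(0, 11), key=f)

print(round(x_cont, 6), x_disc)  # 2.6 3
```

The discrete optimum (x = 3, f = 0.16) is worse than the continuous one (x = 2.6, f = 0), because restricting the feasible set can never improve the minimum. Here, rounding the continuous answer happens to give the discrete one, but that does not hold in general for problems with several variables or constraints.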

What is optimizing a function?

Mathematically speaking, optimization is the minimization or maximization of a function subject to constraints on its variables.
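
As a small example of maximizing subject to a constraint, take the hypothetical problem of finding the rectangle with the largest area for a fixed perimeter of 20. Substituting the constraint y = 10 - x leaves one continuous variable x in [0, 10], and maximizing the area is the same as minimizing its negative. The sketch below uses golden-section search, one of several methods that would work here, and assumes the objective is unimodal on the interval.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618


def golden_section_min(f, lo, hi, tol=1e-9):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    a, b = lo, hi
    c = b - INV_PHI * (b - a)
    d = a + INV_PHI * (b - a)
    while b - a > tol:
        if f(c) < f(d):  # minimum lies in [a, d]
            b, d = d, c
            c = b - INV_PHI * (b - a)
        else:            # minimum lies in [c, b]
            a, c = c, d
            d = a + INV_PHI * (b - a)
    return (a + b) / 2


PERIMETER = 20.0


def area(x):
    # The constraint 2x + 2y = PERIMETER is eliminated via y = PERIMETER / 2 - x.
    return x * (PERIMETER / 2 - x)


# Maximize the area by minimizing its negative.
x_best = golden_section_min(lambda x: -area(x), 0.0, PERIMETER / 2)
print(round(x_best, 4), round(area(x_best), 4))  # 5.0 25.0
```

The search returns the square x = y = 5, with area 25.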

What are the two types of optimization?

We can distinguish between two types of optimization methods: exact methods, which guarantee finding an optimal solution, and heuristic methods, which offer no guarantee that an optimal solution is found.
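
The difference between exact and heuristic methods is easiest to see on a tiny problem. The sketch below uses a hypothetical 0/1 knapsack instance (a discrete problem, chosen because its optimum can be checked by hand). The exact method enumerates every subset, while a common greedy heuristic picks items by value-to-weight ratio.

```python
from itertools import combinations

# Illustrative 0/1 knapsack instance: (weight, value) pairs and a weight capacity.
ITEMS = [(10, 60), (20, 100), (30, 120)]
CAPACITY = 50


def exact_best(items, capacity):
    """Exact method: enumerate every subset, so the optimum is guaranteed."""
    best_value, best_subset = 0, ()
    for r in range(len(items) + 1):
        for subset in combinations(items, r):
            weight = sum(w for w, _ in subset)
            value = sum(v for _, v in subset)
            if weight <= capacity and value > best_value:
                best_value, best_subset = value, subset
    return best_value, best_subset


def greedy_by_ratio(items, capacity):
    """Heuristic: take items by value/weight ratio; fast but not guaranteed optimal."""
    total_weight, total_value, chosen = 0, 0, []
    for w, v in sorted(items, key=lambda item: item[1] / item[0], reverse=True):
        if total_weight + w <= capacity:
            total_weight += w
            total_value += v
            chosen.append((w, v))
    return total_value, tuple(chosen)


print(exact_best(ITEMS, CAPACITY))       # (220, ((20, 100), (30, 120)))
print(greedy_by_ratio(ITEMS, CAPACITY))  # (160, ((10, 60), (20, 100)))
```

The greedy heuristic returns 160 while the true optimum is 220. Exhaustive enumeration is guaranteed to be optimal but examines 2^n subsets for n items, which is why heuristics are used when problems grow large.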

Howtosignalprocessing
